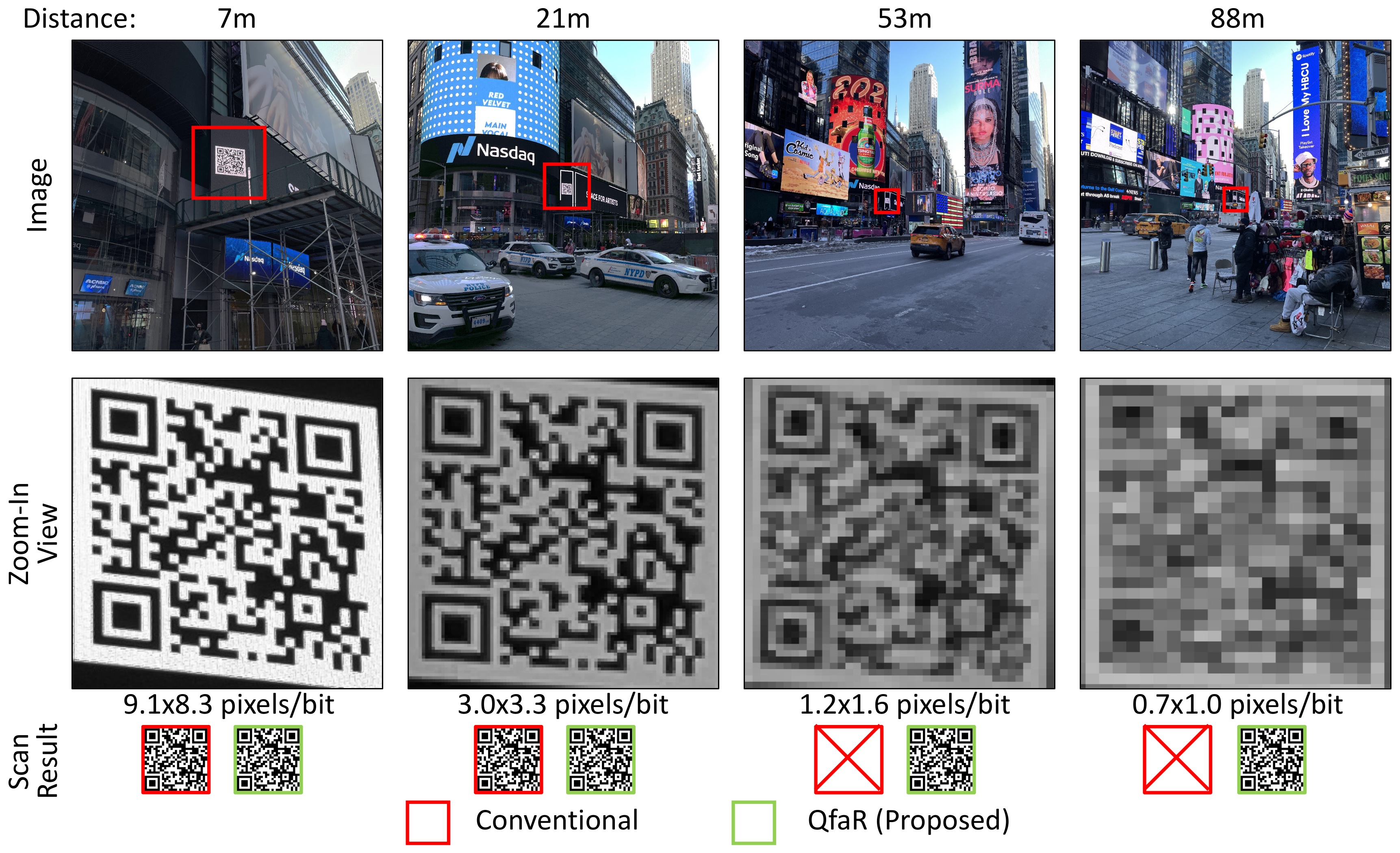

Visual codes such as QR codes provide a low-cost and convenient communication channel between physical objects and mobile devices, but typically operate only when the code and the device are in close physical proximity. We propose a system, called QfaR, which enables mobile devices to scan visual codes across long distances, even where the image resolution of the visual codes is extremely low. QfaR is based on location-guided code scanning, where we utilize a crowdsourced database of physical locations of codes. Our key observation is that if the approximate locations of the codes and the user are known, the space of possible codes can be dramatically pruned. Then, even if not every single bit of the low-resolution code can be recovered, QfaR can still identify the visual code from the pruned list with high probability. By applying computer vision techniques, QfaR is also robust against challenging imaging conditions, such as tilt and motion blur. Experimental results with common iOS and Android devices show that QfaR can significantly increase the distances at which codes can be scanned, e.g., 3.6 cm-sized codes can be scanned at a distance of 7.5 meters, and 0.5 m-sized codes at about 100 meters. QfaR has many potential applications, and beyond our diverse experiments, we also conduct a simple case study on its use for efficiently scanning QR code-based badges to estimate event attendance.
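The location-guided idea can be illustrated with a minimal sketch. This is not the QfaR implementation: the database layout, the function names (`prune_by_location`, `identify`), the search radius, and the use of a simple L1 distance between per-module intensities and candidate bit patterns are all assumptions made for illustration. It only shows how a small, location-pruned candidate list might let a noisy observation be matched without decoding every bit.

```python
import math

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    r = 6371000.0  # mean Earth radius in meters
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def prune_by_location(database, user_lat, user_lon, radius_m):
    """Keep only codes whose recorded location lies within radius_m of the user."""
    return [
        entry for entry in database
        if haversine_m(user_lat, user_lon, entry["lat"], entry["lon"]) <= radius_m
    ]

def identify(observed, candidates):
    """Return the candidate whose bit pattern is closest to the observation.

    observed: estimated intensity per code module, each in [0, 1]
              (values near 0.5 are modules that could not be resolved).
    Uses an L1 distance as a stand-in matching score (an assumption).
    """
    best, best_score = None, math.inf
    for cand in candidates:
        score = sum(abs(o - b) for o, b in zip(observed, cand["bits"]))
        if score < best_score:
            best, best_score = cand, score
    return best

# Hypothetical toy database: 8-module "codes" with recorded locations.
DATABASE = [
    {"id": "A", "lat": 40.4433, "lon": -79.9436, "bits": [1, 0, 1, 1, 0, 0, 1, 0]},
    {"id": "B", "lat": 40.4434, "lon": -79.9437, "bits": [0, 1, 1, 0, 1, 0, 0, 1]},
    {"id": "C", "lat": 48.8566, "lon": 2.3522, "bits": [1, 0, 1, 1, 0, 0, 1, 1]},
]

# A blurry observation: no module is cleanly 0 or 1.
OBSERVED = [0.7, 0.4, 0.6, 0.8, 0.3, 0.45, 0.55, 0.35]

nearby = prune_by_location(DATABASE, 40.4433, -79.9436, radius_m=200.0)
match = identify(OBSERVED, nearby)
print(match["id"] if match else "no candidate in range")
```

In this toy setup, code C differs from code A by a single module, so it would be hard to separate from A using the blurry observation alone; pruning by location removes it from consideration before matching.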
